I've finally got something to post.

Last month I was playing with the TI MSP430 Launchpad, and while working with the ADC I missed a way to visualize its readings. Since the Launchpad has a UART-to-USB interface, I decided to plot the incoming data.

I'm using the MSP430G2553, and all the code was written for this microcontroller.

Firmware

At a high level the firmware is pretty straightforward: it just sends values from the ADC to the UART continuously. There is one catch: before sending anything, it has to perform a "handshake", i.e. receive a start symbol from the computer. So the high-level algorithm looks like this:

1) Initialize the UART [1] (9600 baud) and the ADC [2]

2) Wait for the start signal ("handshake")

3) In an infinite loop, send the temperature over the UART
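If you want to work on the desktop side without the board plugged in, the three steps above can be imitated in Python. The sketch below is a hypothetical FakeLaunchpad class (not part of the original project) that implements only the two pySerial methods the plotting script calls, write() and readline(), and follows the same protocol: it ignores input until b"1" arrives, answers "\nOK\n", then returns one reading per line. The temperature values are invented around an assumed base_temp.

```python
import random
from collections import deque


class FakeLaunchpad:
    """Hypothetical stand-in for serial.Serial that mimics the firmware protocol.

    Only write() and readline() are implemented, because those are the only
    port methods the plotting script uses.
    """

    def __init__(self, base_temp=25, seed=0):
        self._rng = random.Random(seed)  # deterministic fake readings
        self._base_temp = base_temp      # assumed temperature, not a real measurement
        self._started = False            # becomes True after the handshake
        self._pending = deque()          # lines queued for readline()

    def write(self, data):
        # Step 2: wait for the start symbol '1'; once streaming, input is ignored.
        if not self._started and b"1" in data:
            self._started = True
            # The firmware sends "\nOK\n": an empty line followed by "OK".
            self._pending.extend([b"\n", b"OK\n"])
        return len(data)

    def readline(self):
        if self._pending:
            return self._pending.popleft()
        if not self._started:
            return b""                   # what pySerial returns when a read times out
        # Step 3: one reading per line, like uart_printf("%i\n", ...).
        value = self._base_temp + self._rng.randint(-1, 1)
        return f"{value}\n".encode("ascii")
```

Passing FakeLaunchpad() where the script creates serial.Serial('/dev/ttyACM0', 9600, timeout=1) should be enough to exercise the handshake and the plotting loop.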

ADC initialization for reading the internal temperature sensor (channel 10):

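```c
void ADC_init(void) {
    // Internal 1.5 V reference (SREF_1 + REFON), ADC on, 64 x ADC10CLK sample-and-hold
    ADC10CTL0 = SREF_1 + REFON + ADC10ON + ADC10SHT_3;
    // Input channel 10 = internal temperature sensor, ADC10CLK divided by 4
    ADC10CTL1 = INCH_10 + ADC10DIV_3;
}

int getTemperatureCelsius(void)
{
    int t = 0;
    __delay_cycles(1000);               // Not necessary.
    ADC10CTL0 |= ENC + ADC10SC;         // enable and start a conversion
    while (ADC10CTL1 & BUSY);           // wait for the conversion to finish
    t = ADC10MEM;
    ADC10CTL0 &= ~ENC;
    return (int)((t * 27069L - 18169625L) >> 16); // magic conversion to Celsius
}
```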
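The "magic" constants appear to be a Q16 fixed-point version of the sensor transfer function from the MSP430x2xx family user's guide, V_TEMP = 0.00355 * T + 0.986 V (typical values), measured against the 1.5 V internal reference (SREF_1 with REFON, REF2_5V not set) with full scale taken as 1023 counts. That reading is my reconstruction rather than something stated in [2]. The Python check below shows that scaling the slope and the offset by 2^16, with 0.5 subtracted from the offset so that the final >> 16 rounds to the nearest degree instead of truncating, reproduces 27069 and 18169625 exactly.

```python
REF_MV = 1500.0         # 1.5 V internal reference (SREF_1 + REFON, REF2_5V not set)
FULL_SCALE = 1023       # 10-bit ADC, full scale taken as 1023 counts (assumption)
SLOPE_MV_PER_C = 3.55   # typical sensor slope, mV per degree C
OFFSET_MV = 986.0       # typical sensor output at 0 degrees C, mV


def celsius_float(adc):
    """Reference formula: T = (V_sensor - 986 mV) / 3.55 mV per degree C."""
    millivolts = adc * REF_MV / FULL_SCALE
    return (millivolts - OFFSET_MV) / SLOPE_MV_PER_C


# Q16 constants: multiply by 2**16; subtracting 0.5 makes ">> 16" round to nearest.
SLOPE_Q16 = round(REF_MV / (FULL_SCALE * SLOPE_MV_PER_C) * 65536)
OFFSET_Q16 = round((OFFSET_MV / SLOPE_MV_PER_C - 0.5) * 65536)


def celsius_fixed(adc):
    """Integer-only version, as in getTemperatureCelsius()."""
    # Python's >> floors negative numbers; in C, right-shifting a negative signed
    # value is implementation-defined (GCC uses an arithmetic shift).
    return (adc * SLOPE_Q16 - OFFSET_Q16) >> 16


print(SLOPE_Q16, OFFSET_Q16)  # → 27069 18169625
print(celsius_fixed(740))     # → 28
```

The integer version needs only one multiplication, one subtraction and a shift, which is cheap on a chip without a hardware divider.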

Handshake:

```c
// UART Handshake...
unsigned char c;
while ((c = uart_getc()) != '1');   // block until the start symbol arrives
uart_puts("\nOK\n");
```

The firmware waits for '1' and replies with "OK" once it arrives.

After that, the program sends the temperature indefinitely:

```c
while (1) {
    uart_printf("%i\n", getTemperatureCelsius());
    P1OUT ^= 0x1;   // toggle the LED on P1.0 after every sample
}
```

uart_printf converts the integer value to a string and sends it over the UART [3].
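The number-to-text step inside uart_printf (xtoa() from [3], in the full firmware at the bottom of this post) avoids division, for which the MSP430 CPU has no instruction: for each power of ten in the table dv[], it counts how many times that power can be subtracted. A Python port of the same idea, for illustration only; the C version stops after the entry 1 because it is the only odd value in the table, while the sketch simply iterates over the remaining powers.

```python
# Powers of ten, largest first, mirroring dv[] in the firmware.
DV = [1000000000, 100000000, 10000000, 1000000, 100000, 10000, 1000, 100, 10, 1]


def xtoa(x, start=0):
    """Return the decimal digits of x using only comparison and subtraction.

    start=5 corresponds to "dv + 5" (16-bit values), start=0 to 32-bit values.
    """
    powers = DV[start:]
    if x == 0:
        return "0"
    i = 0
    while x < powers[i]:       # skip leading zeros
        i += 1
    digits = []
    for d in powers[i:]:
        c = 0
        while x >= d:          # count subtractions instead of dividing
            x -= d
            c += 1
        digits.append(chr(ord("0") + c))
    return "".join(digits)


def format_i(value):
    """Like uart_printf's "%i": optional minus sign, then the 16-bit table."""
    sign = "-" if value < 0 else ""
    return sign + xtoa(abs(value), start=5)
```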

The full firmware source code is at the bottom of this post.

Plotting Application

I love matplotlib in Python; it's a great library for plotting just about anything. To read data from the UART, I used the pySerial library. That's all we need.

When the Launchpad is connected to the computer (on Linux), the device /dev/ttyACM0 is created. This is the serial port we need to use.

The application consists of two threads:

- Serial port processing

- Continuous plot updating

Serial port processing

Let's define two global variables: data = deque(0 for _ in range(5000)), which holds the raw values to plot, and dataP = deque(0 for _ in range(5000)), which holds the smoothed (averaged) values.
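The original keeps each buffer at 5000 samples with an append()/popleft() pair (see addValue below). Python's deque can do this on its own through its maxlen argument: when a bounded deque is full, append() discards an item from the opposite end, so the length never changes, not even briefly. A minimal sketch of the same two buffers as an alternative, not what the original script does:

```python
from collections import deque

N_SAMPLES = 5000  # same window length as the original buffers

# Pre-filled with zeros like the original. With maxlen set, each deque is a
# fixed-size ring buffer: append() on a full deque drops the leftmost item.
data = deque([0] * N_SAMPLES, maxlen=N_SAMPLES)
dataP = deque([0.0] * N_SAMPLES, maxlen=N_SAMPLES)

data.append(25)   # no popleft() needed
```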

In the serial port thread, we first open the connection:

```python
ser = serial.Serial('/dev/ttyACM0', 9600, timeout=1)
```

Then we perform the handshake:

```python
ok = b''
while ok.strip() != b'OK':
    ser.write(b"1")
    ok = ser.readline()
print("Handshake OK!\n")
```

As you can see, we keep sending "1" until "OK" comes back. After the handshake, we can start reading data:

```python
while True:
    try:
        val = int(ser.readline().strip())
        addValue(val)
    except ValueError:
        pass
```

The UART link is not perfectly reliable, so sometimes you receive corrupted data. That's why I silently swallow the ValueError raised by lines that can't be parsed as integers.
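Swallowing ValueError drops lines that are not numbers at all, but a corrupted line can still parse as an integer (a lost or extra digit can turn 25 into 5 or 925). A slightly stricter parser, sketched below, also rejects values outside a plausible range; the -40 to 85 degree bounds are my assumption, not something from the original code, so adjust them to your setup.

```python
def parse_line(raw, low=-40, high=85):
    """Turn one line from the port into an int, or None if it looks corrupted.

    low/high are assumed plausibility bounds in degrees C, not firmware values.
    """
    try:
        # UnicodeDecodeError is a subclass of ValueError, so one except covers both.
        value = int(raw.decode("ascii").strip())
    except ValueError:
        return None
    if not low <= value <= high:
        return None
    return value
```

In the reading loop, val = parse_line(ser.readline()) followed by "if val is not None: addValue(val)" would replace the try/except. It still cannot catch a corrupted value that happens to be in range, such as 5 instead of 25.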

addValue is the function that processes each value and appends it to the data deque:

```python
avg = 0

def addValue(val):
    global avg
    data.append(val)
    data.popleft()
    avg = avg + 0.1 * (val - avg)
    dataP.append(avg)
    dataP.popleft()
```

It also computes a weighted moving average (an exponential one, with a smoothing factor of 0.1) and stores it in dataP.
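The update avg = avg + 0.1 * (val - avg) gives each new sample a weight of 0.1 while older samples decay by a factor of 0.9 per step. Because avg starts at 0, the smoothed curve lags at start-up: after n samples of a constant input it has covered 1 - 0.9^n of the distance, so it needs 22 samples to reach 90%. One option, sketched below as my own variation rather than the original behaviour, is to seed the average with the first sample; the helper samples_to_reach just counts the start-up lag.

```python
class Ewma:
    """Exponentially weighted moving average, seeded with the first sample."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha   # same smoothing factor as the original addValue
        self.value = None    # no estimate until the first sample arrives

    def update(self, sample):
        if self.value is None:
            self.value = float(sample)  # avoids the slow ramp up from 0
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


def samples_to_reach(fraction, alpha=0.1):
    """Samples an average started at 0 needs to cover `fraction` of a step."""
    n, remaining = 0, 1.0
    while 1.0 - remaining < fraction:
        remaining *= 1.0 - alpha
        n += 1
    return n
```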

Continuous plot updating

First, let's create a figure with two plots:

```python
fig, (p1, p2) = plt.subplots(2, 1)
plot_data, = p1.plot(data, animated=True)
plot_processed, = p2.plot(dataP, animated=True)  # second plot shows the averaged values
p1.set_ylim(0, 100)  # y limits
p2.set_ylim(0, 100)
```

To draw an animated plot, we need to define a function that updates the plotted data on every frame:

```python
def animate(i):
    plot_data.set_ydata(data)
    plot_data.set_xdata(range(len(data)))
    plot_processed.set_ydata(dataP)
    plot_processed.set_xdata(range(len(dataP)))
    return [plot_data, plot_processed]

ani = animation.FuncAnimation(fig, animate, range(10000),
                              interval=50, blit=True)
```
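animate() runs in the GUI thread while the serial thread keeps calling addValue(). A single deque append() or popleft() is thread-safe on its own (the collections documentation says so), but addValue() is a sequence of them, so a frame can be drawn from a half-updated state: data briefly holds 5001 items between append() and popleft(), and data and dataP can be one sample apart. A sketch of one way around this, using a hypothetical SampleBuffer class: keep both buffers in one object, update them under a threading.Lock, and give animate() copies.

```python
import threading
from collections import deque


class SampleBuffer:
    """Hypothetical helper: raw and smoothed buffers shared between two threads."""

    def __init__(self, size=5000, alpha=0.1):
        self._lock = threading.Lock()
        self._raw = deque([0] * size, maxlen=size)
        self._smooth = deque([0.0] * size, maxlen=size)
        self._alpha = alpha   # same smoothing factor as the original addValue
        self._avg = 0.0

    def add(self, value):
        # Called from the serial thread; the whole update happens under the lock.
        with self._lock:
            self._raw.append(value)
            self._avg += self._alpha * (value - self._avg)
            self._smooth.append(self._avg)

    def snapshot(self):
        # Called from animate(); returns copies, so a frame never sees a partial update.
        with self._lock:
            return list(self._raw), list(self._smooth)
```

In animate(), raw, smooth = buf.snapshot() would then feed plot_data.set_ydata(raw) and plot_processed.set_ydata(smooth); because the length never changes, set_xdata() only needs to be called once.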

And show the plot window:

```python
plt.show()
```

Here's the result of running the program:

The first plot shows the raw data received through the serial port; the second shows the moving average.

Source code

Desktop live plotting application:


```python
import matplotlib.pyplot as plt
import matplotlib.animation as animation
import serial
import threading
from collections import deque

data = deque(0 for _ in range(5000))
dataP = deque(0 for _ in range(5000))
avg = 0

def addValue(val):
    global avg
    data.append(val)
    data.popleft()
    avg = avg + 0.1 * (val - avg)
    dataP.append(avg)
    dataP.popleft()

def msp430():
    print("Connecting...")
    ser = serial.Serial('/dev/ttyACM0', 9600, timeout=1)
    print("Connected!")

    # Handshake...
    ok = b''
    while ok.strip() != b'OK':
        ser.write(b"1")
        ok = ser.readline()
        print(ok.strip())
    print("Handshake OK!\n")

    while True:
        try:
            val = int(ser.readline().strip())
            addValue(val)
            print(val)
        except ValueError:
            pass

if __name__ == "__main__":
    # daemon=True lets the program exit when the plot window is closed
    threading.Thread(target=msp430, daemon=True).start()

    fig, (p1, p2) = plt.subplots(2, 1)
    plot_data, = p1.plot(data, animated=True)
    plot_processed, = p2.plot(dataP, animated=True)
    p1.set_ylim(0, 100)
    p2.set_ylim(0, 100)

    def animate(i):
        plot_data.set_ydata(data)
        plot_data.set_xdata(range(len(data)))
        plot_processed.set_ydata(dataP)
        plot_processed.set_xdata(range(len(dataP)))
        return [plot_data, plot_processed]

    ani = animation.FuncAnimation(fig, animate, range(10000),
                                  interval=50, blit=True)
    plt.show()
```

MSP430 full firmware:


```c
/*
NOTICE
Used code or got an idea from:
UART: Stefan Wendler - http://gpio.kaltpost.de/?page_id=972
ADC: http://indiantinker.wordpress.com/2012/12/13/tutorial-using-the-internal-temperature-sensor-on-a-msp430/
printf: http://forum.43oh.com/topic/1289-tiny-printf-c-version/
*/

#include <msp430g2553.h>

// =========== HEADERS ===============

// UART
void uart_init(void);
void uart_set_rx_isr_ptr(void (*isr_ptr)(unsigned char c));
unsigned char uart_getc(void);
void uart_putc(unsigned char c);
void uart_puts(const char *str);
void uart_printf(char *, ...);

// ADC
void ADC_init(void);
int getTemperatureCelsius(void); // prototype needed: main() calls it before its definition

// =========== /HEADERS ===============

// Trigger on received character
void uart_rx_isr(unsigned char c) {
    P1OUT ^= 0x40;                  // toggle the LED on P1.6
}

int main(void)
{
    WDTCTL = WDTPW + WDTHOLD;       // stop the watchdog
    BCSCTL1 = CALBC1_8MHZ;          // Set DCO to 8 MHz
    DCOCTL = CALDCO_8MHZ;           // Set DCO to 8 MHz

    P1DIR = 0xff;
    P1OUT = 0x1;

    ADC_init();
    uart_init();
    uart_set_rx_isr_ptr(uart_rx_isr);

    __bis_SR_register(GIE);         // global interrupt enable

    // UART Handshake...
    unsigned char c;
    while ((c = uart_getc()) != '1');
    uart_puts("\nOK\n");

    ADC10CTL0 |= ADC10SC;

    while (1) {
        uart_printf("%i\n", getTemperatureCelsius());
        P1OUT ^= 0x1;
    }
}

// ========================================================
// ADC configured to read temperature

void ADC_init(void) {
    ADC10CTL0 = SREF_1 + REFON + ADC10ON + ADC10SHT_3;
    ADC10CTL1 = INCH_10 + ADC10DIV_3;
}

int getTemperatureCelsius(void)
{
    int t = 0;
    __delay_cycles(1000);
    ADC10CTL0 |= ENC + ADC10SC;
    while (ADC10CTL1 & BUSY);
    t = ADC10MEM;
    ADC10CTL0 &= ~ENC;
    return (int)((t * 27069L - 18169625L) >> 16);
}

// ========================================================
// UART

#include <legacymsp430.h>

#define RXD BIT1
#define TXD BIT2

/**
 * Callback handler for receive
 */
void (*uart_rx_isr_ptr)(unsigned char c);

void uart_init(void)
{
    uart_set_rx_isr_ptr(0L);

    P1SEL = RXD + TXD;
    P1SEL2 = RXD + TXD;

    UCA0CTL1 |= UCSSEL_2;           // SMCLK
    // 8,000,000 Hz, 9600 baud, UCBRx=52, UCBRSx=0, UCBRFx=1
    UCA0BR0 = 52;                   // 8 MHz, OSC16, 9600
    UCA0BR1 = 0;                    // ((8 MHz / 9600) / 16) = 52.08333
    UCA0MCTL = 0x10 | UCOS16;       // UCBRFx=1, UCBRSx=0, UCOS16=1
    UCA0CTL1 &= ~UCSWRST;           // initialize USCI state machine
    IE2 |= UCA0RXIE;                // Enable USCI_A0 RX interrupt
}

void uart_set_rx_isr_ptr(void (*isr_ptr)(unsigned char c))
{
    uart_rx_isr_ptr = isr_ptr;
}

unsigned char uart_getc(void)
{
    while (!(IFG2 & UCA0RXIFG));    // USCI_A0 RX buffer ready?
    return UCA0RXBUF;
}

void uart_putc(unsigned char c)
{
    while (!(IFG2 & UCA0TXIFG));    // USCI_A0 TX buffer ready?
    UCA0TXBUF = c;                  // TX
}

void uart_puts(const char *str)
{
    while (*str) uart_putc(*str++);
}

interrupt(USCIAB0RX_VECTOR) USCI0RX_ISR(void)
{
    if (uart_rx_isr_ptr != 0L) {
        (uart_rx_isr_ptr)(UCA0RXBUF);
    }
}

// ========================================================
// UART PRINTF

#include <stdarg.h>

static const unsigned long dv[] = {
//  4294967295      // 32 bit unsigned max
    1000000000,     // +0
    100000000,      // +1
    10000000,       // +2
    1000000,        // +3
    100000,         // +4
//  65535           // 16 bit unsigned max
    10000,          // +5
    1000,           // +6
    100,            // +7
    10,             // +8
    1,              // +9
};

static void xtoa(unsigned long x, const unsigned long *dp)
{
    char c;
    unsigned long d;
    if (x) {
        while (x < *dp) ++dp;
        do {
            d = *dp++;
            c = '0';
            while (x >= d) ++c, x -= d;
            uart_putc(c);
        } while (!(d & 1));
    } else
        uart_putc('0');
}

static void puth(unsigned n)
{
    static const char hex[16] = { '0','1','2','3','4','5','6','7','8','9','A','B','C','D','E','F'};
    uart_putc(hex[n & 15]);
}

void uart_printf(char *format, ...)
{
    char c;
    int i;
    long n;

    va_list a;
    va_start(a, format);
    while ((c = *format++)) {
        if (c == '%') {
            switch (c = *format++) {
                case 's': // String
                    uart_puts(va_arg(a, char*));
                    break;
                case 'c': // Char (promoted to int when passed through "...")
                    uart_putc((char)va_arg(a, int));
                    break;
                case 'i': // 16 bit Integer
                case 'u': // 16 bit Unsigned
                    i = va_arg(a, int);
                    if (c == 'i' && i < 0) i = -i, uart_putc('-');
                    xtoa((unsigned)i, dv + 5);
                    break;
                case 'l': // 32 bit Long
                case 'n': // 32 bit uNsigned loNg
                    n = va_arg(a, long);
                    if (c == 'l' && n < 0) n = -n, uart_putc('-');
                    xtoa((unsigned long)n, dv);
                    break;
                case 'x': // 16 bit heXadecimal
                    i = va_arg(a, int);
                    puth(i >> 12);
                    puth(i >> 8);
                    puth(i >> 4);
                    puth(i);
                    break;
                case 0:
                    va_end(a);
                    return;
                default: goto bad_fmt;
            }
        } else
bad_fmt:    uart_putc(c);
    }
    va_end(a);
}
```
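The divisor values in uart_init() (UCA0BR0 = 52, UCBRFx = 1 with UCOS16 set) follow from the oversampling formula in the MSP430x2xx Family User's Guide: N = f_BRCLK / baud, UCBRx = INT(N / 16), UCBRFx = round((N / 16 - INT(N / 16)) * 16). A quick Python check for the 8 MHz SMCLK and 9600 baud used here; the function name is my own.

```python
def usci_oversampling_divisors(f_brclk, baud):
    """Return (UCBRx, UCBRFx) for UCOS16 = 1, following the user's guide formula."""
    n = f_brclk / baud
    ucbr = int(n // 16)
    ucbrf = round((n / 16 - ucbr) * 16)
    return ucbr, ucbrf


ucbr, ucbrf = usci_oversampling_divisors(8_000_000, 9600)
print(ucbr & 0xFF, ucbr >> 8)       # UCA0BR0, UCA0BR1 → 52 0
print(hex((ucbrf << 4) | 0x01))     # UCA0MCTL: UCBRFx in bits 7-4, UCOS16 in bit 0 → 0x11
```

0x11 is the same value the firmware writes as 0x10 | UCOS16.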

Sources

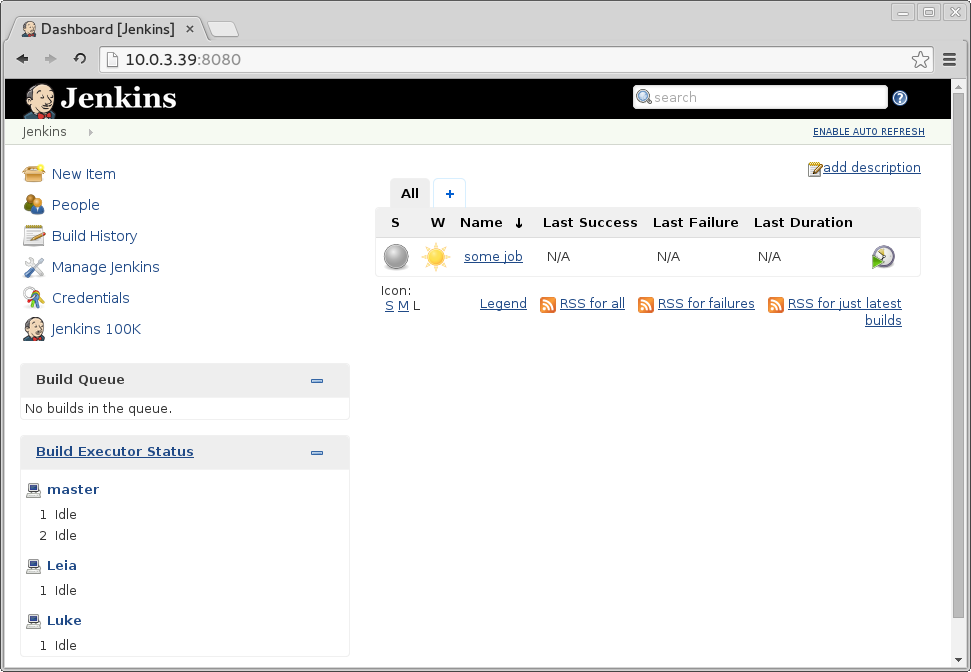

Anyway, installing Jenkins on the host machine and using Docker containers as slaves is useful, but it can clog your computer with garbage files that are hard to clean up. DJS2 offers an isolated environment (an LXC container) in which Jenkins and all Docker slaves run, and only the Jenkins data folder with the jobs is stored on your computer.


It's really easy to deploy Vagrant with slaves running different OSes on your local machine. For now, only CentOS 6 and CentOS 7 are available, but eventually all supported OSes (such as CentOS 5, SUSE and Debian) will be migrated.